For this exercise, you will break into three groups. Two will analyze data, and the third will do legal research. The data for this assignment is available here.

All groups: How can we encode our ideas about racial fairness into a quantitative metric? That is the fundamental question underlying this assignment, and you must answer it. Don’t build models that answer useless questions; each model you build must embody some justifiable concept of fairness. Many such metrics have been proposed, and part of the assignment is researching and evaluating them. I’ve proposed some models that might be interesting, but I’ll be just as happy (perhaps happier) to have you tell me why these particular model formulations will not yield an interesting result. You also have to tell me what your modeling results mean. Is there bias? In what way, how significant is it, and what are the alternative explanations? Uninterpreted results will not get a passing grade.

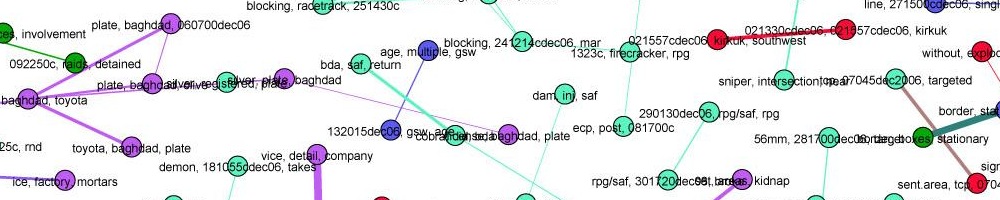

Group 1: Analyze the stop and frisk data using a multi-level linear model

This group will analyze the data along the lines of Gelman 2006. However, that paper used Bayesian estimation, whereas this group will use standard (non-Bayesian) multilevel regression. Use RStudio with the lme4 package, as described in this tutorial.

As above, you need to choose and justify quantitative definitions of fairness. Should you look at stop rate? Hit rate? And should you compare to the racial composition of each precinct? Or per-race crime rates? And if so, which crimes?
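To make the stop-rate and hit-rate definitions concrete, here is a minimal Python sketch that computes both for each (unit, race) pair from stop-level records. The record fields (`race`, `searched`, `contraband`, plus a unit key such as `precinct`) and the `population` benchmark are hypothetical. The real data will use its own column names, and you may prefer per-race crime counts over residential population as the denominator.

```python
from collections import defaultdict


def rates_by_unit(stops, population, unit="precinct"):
    """Stop rate and hit rate for every (unit, race) pair.

    stops: iterable of dicts with hypothetical keys `unit` (e.g. "precinct"
        or "census_block"), "race", "searched" and "contraband".
    population: dict mapping (unit value, race) -> residents, used here as
        the benchmark; swap in per-race crime counts to test a different
        notion of fairness.
    Returns None for a rate whose denominator is missing or zero.
    """
    n_stops = defaultdict(int)
    n_searched = defaultdict(int)
    n_hits = defaultdict(int)
    for stop in stops:
        key = (stop[unit], stop["race"])
        n_stops[key] += 1
        if stop["searched"]:
            n_searched[key] += 1
            if stop["contraband"]:
                n_hits[key] += 1

    rates = {}
    for key, count in n_stops.items():
        residents = population.get(key)
        rates[key] = {
            # stops per resident of this race in this unit
            "stop_rate": count / residents if residents else None,
            # share of searches that found contraband
            "hit_rate": n_hits[key] / n_searched[key] if n_searched[key] else None,
        }
    return rates


# Toy, made-up records for illustration only.
stops = [
    {"precinct": 1, "race": "black", "searched": True, "contraband": False},
    {"precinct": 1, "race": "black", "searched": True, "contraband": True},
    {"precinct": 1, "race": "black", "searched": False, "contraband": False},
    {"precinct": 1, "race": "white", "searched": True, "contraband": True},
    {"precinct": 2, "race": "white", "searched": False, "contraband": False},
]
population = {(1, "black"): 100, (1, "white"): 200}

rates = rates_by_unit(stops, population, unit="precinct")
for key, values in sorted(rates.items()):
    print(key, values)
```

Passing `unit="census_block"` (assuming the records carry such a field) reruns the same metric at a different unit of analysis.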

Each conceptual definition of fairness can be embodied in many different statistical models. Compare at least two different statistical formulations. For example, you might end up modeling:

- hit rate vs race, per precinct

- hit rate vs race, per precinct, with precinct random effects
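The following shows one way to write these two formulations. It assumes stop-level data in which $y_i = 1$ if the search or frisk of person $i$ found contraband, $r[i]$ is that person's race, and $p[i]$ is their precinct. Both are written as logistic (generalized linear) models, with one race as the reference level so that its $\beta$ is 0. Model (A) estimates each precinct's intercept separately. Model (B) draws precinct intercepts from a common normal distribution, which shrinks precincts with few stops toward the citywide mean. These are illustrative sketches and simpler than the model in Gelman 2006.

```latex
\begin{aligned}
\text{(A)}\quad & \operatorname{logit} \Pr(y_i = 1) = \alpha_{p[i]} + \beta_{r[i]} \\
\text{(B)}\quad & \operatorname{logit} \Pr(y_i = 1) = \mu + \alpha_{p[i]} + \beta_{r[i]},
  \qquad \alpha_p \sim \mathcal{N}(0, \sigma_\alpha^2)
\end{aligned}
```

In R, (A) could be fit with `glm(hit ~ race + precinct, data = stops, family = binomial)` and (B) with lme4's `glmer(hit ~ race + (1 | precinct), data = stops, family = binomial)`. The names `hit`, `race`, `precinct` and `stops` are assumed, not taken from the dataset.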

You must also choose the unit of analysis. Is the precinct or the census block a better unit, and why? Alternatively, you could compare the same model across different units.

Your final post must include a justification of your metric and model choices, useful visualizations of the results, and an interpretation of both the regression coefficients and the uncertainty measures (standard errors on the regression coefficients, and model residuals).
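Consider a logistic regression of hit (yes/no) on a single binary race indicator. Its maximum-likelihood coefficient equals the sample log odds ratio of a hit, and its Wald standard error is $\sqrt{1/a + 1/b + 1/c + 1/d}$ over the four cell counts. This makes it a quick way to see what a race coefficient and its standard error mean. The Python sketch below computes both by hand. The counts are made up for illustration. With more covariates or random effects, the coefficients no longer have this closed form, so read them from lme4's output for the real models.

```python
import math


def log_odds_ratio(hits_a, misses_a, hits_b, misses_b, z=1.96):
    """Log odds ratio of a hit for group a vs. group b, with Wald SE and CI.

    Equivalent to the race coefficient in a logistic regression whose only
    covariate is a binary indicator for group a (group b is the reference).
    """
    if min(hits_a, misses_a, hits_b, misses_b) == 0:
        raise ValueError("all four cells must be positive for the Wald SE")
    beta = math.log((hits_a / misses_a) / (hits_b / misses_b))
    se = math.sqrt(1 / hits_a + 1 / misses_a + 1 / hits_b + 1 / misses_b)
    return beta, se, (beta - z * se, beta + z * se)


# Hypothetical counts: 30 of 1000 searches of group a found contraband,
# versus 60 of 1000 searches of group b.
beta, se, (lo, hi) = log_odds_ratio(30, 970, 60, 940)
print(f"beta = {beta:.3f}, SE = {se:.3f}, 95% CI = ({lo:.3f}, {hi:.3f})")
print(f"odds ratio = {math.exp(beta):.2f}")
```

Here $e^{\hat\beta} \approx 0.48$, so searches of group a are estimated to have about half the odds of finding contraband, and the interval excludes zero. Under an outcome-test reading, that hints at a lower search bar for group a. However, hit-rate differences can also come from differences in underlying risk, which is the concern behind Simoiu's threshold test.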

Group 2: Analyze the stop and frisk data using a Bayesian model

This group will analyze the data along the lines of Simoiu 2016, by adapting their code. For a tutorial on how to set up Bayesian modeling in R, see Bayesian Linear Mixed Models using STAN.

As with Group 1, you must research and choose a definition of fairness, fit at least two different statistical formulations, and interpret your results, including the uncertainty (posterior distributions and residuals). Be sure to visualize your model fit, as in figure 7 of Simoiu.
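Reporting a posterior rather than a point estimate is the main practical difference from Group 1. The Python sketch below is a toy illustration only: a conjugate Beta-Binomial model, not Simoiu's threshold model, which you would fit in Stan. It turns hit and search counts for two groups into posterior distributions over their hit rates and summarizes each with a 95% credible interval. It then estimates the posterior probability that group a's hit rate is lower than group b's. The counts and the uniform Beta(1, 1) prior are assumptions made for illustration.

```python
import random


def beta_posterior(hits, searches, prior_a=1.0, prior_b=1.0):
    """Conjugate Beta posterior parameters for a hit rate under a Beta prior."""
    return prior_a + hits, prior_b + searches - hits


def posterior_summary(a, b, draws=20_000, seed=0):
    """Exact posterior mean and a Monte Carlo 95% equal-tailed interval."""
    rng = random.Random(seed)
    samples = sorted(rng.betavariate(a, b) for _ in range(draws))
    mean = a / (a + b)
    lo = samples[int(0.025 * draws)]
    hi = samples[int(0.975 * draws) - 1]
    return mean, (lo, hi)


def prob_lower(post_a, post_b, draws=20_000, seed=0):
    """Monte Carlo estimate of P(hit rate of a < hit rate of b | data)."""
    rng = random.Random(seed)
    wins = sum(
        rng.betavariate(*post_a) < rng.betavariate(*post_b) for _ in range(draws)
    )
    return wins / draws


# Hypothetical counts: 30 hits in 1000 searches vs. 60 hits in 1000 searches.
post_a = beta_posterior(30, 1000)
post_b = beta_posterior(60, 1000)
mean_a, ci_a = posterior_summary(*post_a)
mean_b, ci_b = posterior_summary(*post_b)
p_lower = prob_lower(post_a, post_b)

print(f"group a: mean {mean_a:.4f}, 95% CI ({ci_a[0]:.4f}, {ci_a[1]:.4f})")
print(f"group b: mean {mean_b:.4f}, 95% CI ({ci_b[0]:.4f}, {ci_b[1]:.4f})")
print(f"P(rate_a < rate_b | data) = {p_lower:.4f}")
```

A statement like "the posterior probability that group a's hit rate is lower is above 0.99" is the Bayesian counterpart of a coefficient and its standard error. Plotting the two posterior densities is one simple way to visualize it.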

I would be happy to see you replicate the threshold test of Simoiu. However, I want you to explain why the threshold test makes sense as a fairness metric. If it doesn’t, I want you to design a new model. For instance, the assumption that each officer can make correctly calibrated estimates of the probability that someone is carrying contraband may be unrealistic. In that case, your model could instead assume the estimates are biased and represent that bias with latent variables.
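In Simoiu's framing, an officer searches a stopped person when their estimated probability of carrying contraband exceeds a race-specific threshold $t_r$, and lower thresholds for some groups indicate discrimination. The Python sketch below is a deliberately simplified, single-precinct version of that idea. It assumes, hypothetically, that risk within each group follows a Beta distribution and that officers see that risk exactly. It shows why hit rates alone can mislead: with the same threshold, the search rate is $\Pr(p > t)$ and the hit rate is $\mathbb{E}[p \mid p > t]$, and both depend on the shape of each group's risk distribution.

```python
import math


def search_and_hit_rate(a, b, threshold, steps=20_000):
    """Search rate P(p > t) and hit rate E[p | p > t] for risk p ~ Beta(a, b).

    Uses the midpoint rule on [threshold, 1]; assumes a, b >= 1 so the
    density is finite everywhere on that interval.
    """
    log_norm = math.lgamma(a + b) - math.lgamma(a) - math.lgamma(b)
    width = (1.0 - threshold) / steps
    search_rate = 0.0  # probability mass above the threshold
    hit_mass = 0.0     # integral of p * density above the threshold
    for i in range(steps):
        x = threshold + (i + 0.5) * width
        density = math.exp(log_norm + (a - 1) * math.log(x) + (b - 1) * math.log1p(-x))
        search_rate += density * width
        hit_mass += x * density * width
    return search_rate, hit_mass / search_rate


# Hypothetical risk distributions: group A averages 20% risk, group B 10%.
# Officers apply the SAME threshold to both groups.
threshold = 0.15
groups = {"group A": (2, 8), "group B": (2, 18)}
results = {name: search_and_hit_rate(a, b, threshold) for name, (a, b) in groups.items()}
for name, (search, hit) in results.items():
    print(f"{name}: search rate {search:.3f}, hit rate {hit:.3f}")
```

Both groups face the same threshold, yet group B has a lower hit rate (roughly 0.20 versus 0.27). A pure outcome test would therefore wrongly suggest that officers hold group B to a lower standard. This is the infra-marginality problem that Simoiu et al. raise. The threshold test tries to infer $t_r$ directly instead. Whether calibrated, exactly observed risk is a realistic assumption is the question posed above.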

Group 3: Research legal and policy precedent for statistical tests

This group will scour the legal literature to determine what sorts of statistical tests have been used, or could be used, to answer legal questions of discrimination. You will also research the related policy literature: what sorts of tests have governments, companies, schools etc. used to evaluate the presence or significance of discrimination. For an entry into the literature, you could do worse than to start with the works referenced by Big Data’s Disparate Impact.

This group will not be coding, but I expect you to ask not only what fairness metrics might be appropriate (as the other groups must also do), but also 1) whether these metrics would hold up in court and 2) whether they have ever been used outside of court.

And one particular question I would like you to answer: would Simoiu’s “threshold test” have legal or policy relevance?

Due Friday Nov 10