Journalism has, historically, considered itself to be about text or other unstructured content such as images, audio, and video. This week we ask the questions: how much of journalism might actually be data? How would we represent this data? Can we get structured data from unstructured data?

We start with Holovaty’s 2006 classic, A fundamental way newspaper sites need to change, which lays out the argument that the product of journalism is data, not necessarily stories. Central to this is the idea that it may not be humans consuming this data, but software that combines information for us in useful ways — like Google’s Knowledge Graph.

But to publish this kind of data, we need a standard to encode it. This gets us into the question of “what is a general way to encode human knowledge?” which has been asked by the AI community for at least 50 years. That’s why the title of this lecture is “knowledge representation.”

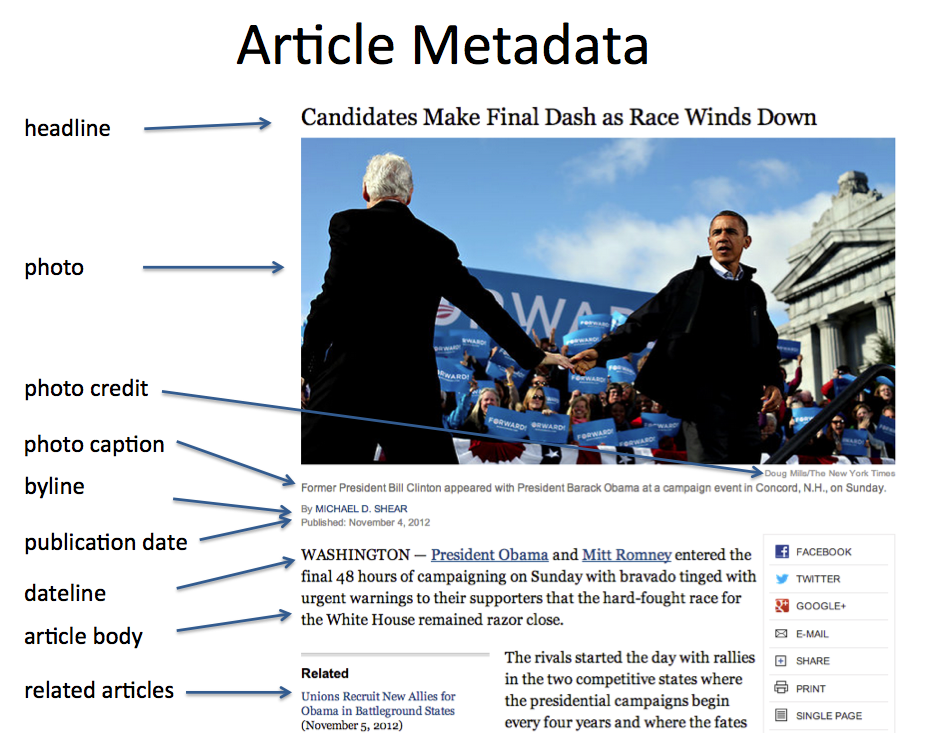

This is a hard problem, but let’s start with an easier one which has been solved: story metadata. Even without encoding the “guts” of a story as data, there is lots of useful data “attached” to a story that we don’t usually think about.

These details are important to any search engine or program that is trying to scrape the page. They might also include information on what the story is “about,” such as a subject classification or a list of the entities (people, places, organizations) mentioned. There is a recent standard for encoding all of this sort of information directly within the page HTML, defined by schema.org, which is a joint project of Google, Bing, and Yahoo. Take a look at the schema.org definition of a news article, and what it looks like in HTML. If you view the source of a New York Times, CNN, or Guardian article you will see these tags in use today.
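As a rough illustration of story metadata as data, here is a minimal sketch that builds a schema.org `NewsArticle` description in Python. It uses the JSON-LD encoding rather than inline HTML attributes, which is an assumption made for brevity. The function names and all example values are hypothetical; only the schema.org property names (`headline`, `datePublished`, `author`, `about`, `mentions`) come from the vocabulary itself.

```python
import json


def news_article_metadata(headline, date_published, author_name, about, mentions):
    """Build a schema.org NewsArticle description as a JSON-LD dictionary."""
    return {
        "@context": "https://schema.org",
        "@type": "NewsArticle",
        "headline": headline,
        "datePublished": date_published,
        "author": {"@type": "Person", "name": author_name},
        # What the story is "about": subject classification.
        "about": [{"@type": "Thing", "name": topic} for topic in about],
        # Entities (people, places, organizations) mentioned in the story.
        "mentions": [{"@type": "Thing", "name": entity} for entity in mentions],
    }


def as_script_tag(metadata):
    """Wrap the metadata in the script tag used to embed JSON-LD in a page."""
    body = json.dumps(metadata, indent=2)
    return '<script type="application/ld+json">\n' + body + "\n</script>"


# Hypothetical story, for illustration only.
example = news_article_metadata(
    headline="City council approves new budget",
    date_published="2013-10-01",
    author_name="A. Reporter",
    about=["Municipal budgets"],
    mentions=["City Council", "Springfield"],
)
print(as_script_tag(example))
```

A scraper or search engine that understands the vocabulary can read these fields without parsing the prose of the story at all.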

In fact, every big news organization has its own internal schema, though some use it a lot more than others. The New York Times has been adding subject metadata since the early 20th century, as part of its (initially manual) indexing service. But we’d really like to be able to combine this type of information from multiple sources. This is the idea behind “linked open data,” which is now a W3C standard. Here’s Tim Berners-Lee describing the idea.

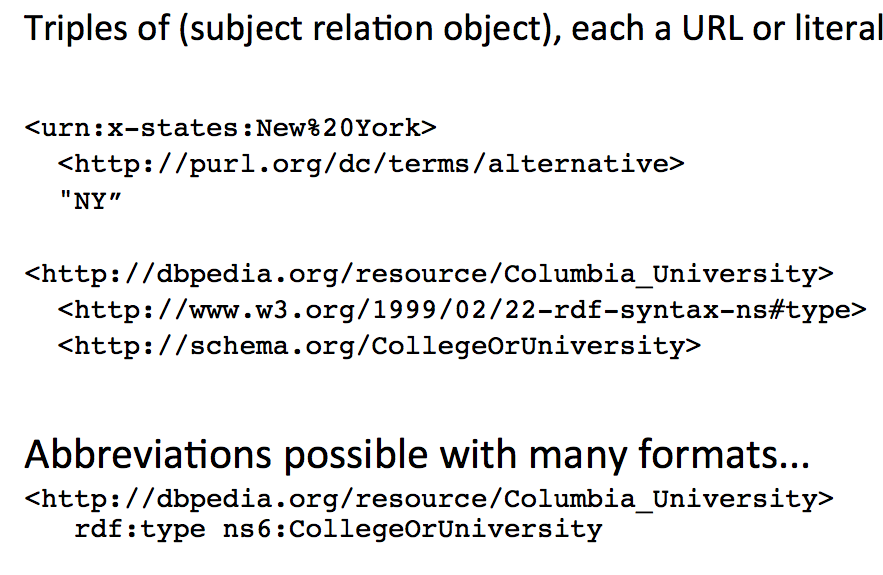

The linked data standard says each “fact” is described as a triple, in “subject relation object” form. Each of these three items is in turn either a literal constant, or a URL. Linked data is linked because it’s easy to refer to someone else’s objects by their URL. A single triple is equivalent to the proposition relation(subject,object) in mathematical logic. A database of triples is also called a “triplestore.”
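To make the triple idea concrete, here is a minimal in-memory sketch of a triplestore. It is a toy, not how production triplestores are built. The `TripleStore` class and the `example.org` URLs are hypothetical names chosen for illustration.

```python
from typing import List, Optional, Tuple

# A triple is (subject, relation, object). Each item is a URL or a literal.
Triple = Tuple[str, str, str]


class TripleStore:
    """A toy triplestore: a set of triples plus wildcard pattern matching."""

    def __init__(self) -> None:
        self.triples = set()

    def add(self, subject: str, relation: str, obj: str) -> None:
        self.triples.add((subject, relation, obj))

    def match(
        self,
        subject: Optional[str] = None,
        relation: Optional[str] = None,
        obj: Optional[str] = None,
    ) -> List[Triple]:
        """Return all triples matching the pattern; None acts as a wildcard."""
        return sorted(
            t
            for t in self.triples
            if (subject is None or t[0] == subject)
            and (relation is None or t[1] == relation)
            and (obj is None or t[2] == obj)
        )


store = TripleStore()
# Hypothetical URLs; the last object is a literal constant rather than a URL.
store.add("http://example.org/people/alice",
          "http://example.org/rel/worksFor",
          "http://example.org/orgs/daily-planet")
store.add("http://example.org/people/alice",
          "http://example.org/rel/name",
          "Alice")

# Everything the store knows about Alice.
for triple in store.match(subject="http://example.org/people/alice"):
    print(triple)
```

Each stored tuple corresponds to one proposition relation(subject, object), and a query is just a pattern with some positions left open.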

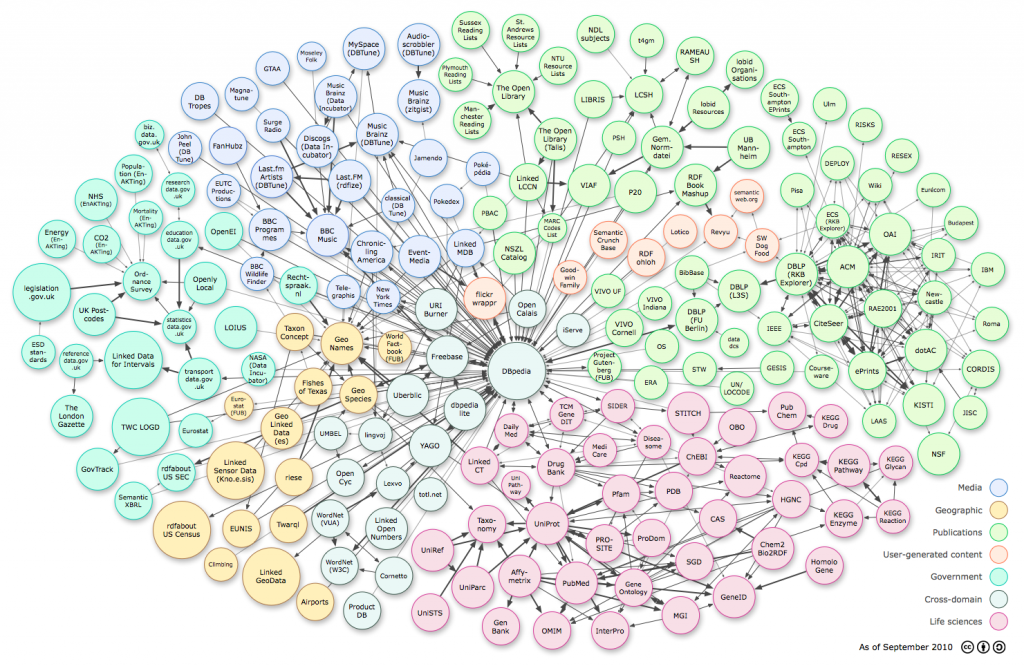

There are many sites that already support this type of data. The W3C standard for expressing triples is RDF, whose original serialization is XML-based, but there is also a much simpler JSON encoding of linked data. Here is what the “linked data cloud” looked like in 2010; it’s much bigger now and no one has mapped it recently.

The arrows indicate that one database references objects in the other. You will notice something called DBPedia at the center of the cloud. This is data derived from all those “infoboxes” on the right side of Wikipedia articles, and it has become the de-facto common language for many kinds of linked data.

Not only can one publisher refer to the objects of another publisher, but the standardized owl:sameAs relation can be used to equate one publisher’s object to a DBPedia object, or anyone else’s object. This expression of equivalence is an important mechanism that allows interoperability between different publishers. (As I mentioned above, every relation is actually a URL, so owl:sameAs is more fully known as http://www.w3.org/2002/07/owl#sameAs, but the syntax of many linked data formats allows abbreviations in many cases.)
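Here is a small sketch of both ideas: expanding an abbreviated name such as owl:sameAs into its full URL, and using owl:sameAs triples to rewrite one publisher’s identifiers into another’s. The function names, the `news.example.org` URLs, and the `dbpedia` prefix label are assumptions for illustration. The sketch also simplifies by treating owl:sameAs as a one-way mapping toward the object, although the relation is symmetric.

```python
PREFIXES = {
    "owl": "http://www.w3.org/2002/07/owl#",
    "dbpedia": "http://dbpedia.org/resource/",
}


def expand(name, prefixes=PREFIXES):
    """Expand 'prefix:local' into a full URL; leave anything else unchanged."""
    prefix, sep, local = name.partition(":")
    if sep and prefix in prefixes:
        return prefixes[prefix] + local
    return name


SAME_AS = expand("owl:sameAs")


def canonicalize(triples):
    """Rewrite subjects and objects through owl:sameAs links, then drop the links."""
    alias = {s: o for s, r, o in triples if r == SAME_AS}

    def resolve(node):
        seen = set()
        # Follow chains of aliases; the seen set guards against cycles.
        while node in alias and node not in seen:
            seen.add(node)
            node = alias[node]
        return node

    return {(resolve(s), r, resolve(o)) for s, r, o in triples if r != SAME_AS}


# Hypothetical publisher data that points at a DBPedia object.
school = "http://news.example.org/org/columbia-j-school"
publisher_triples = {
    (school, SAME_AS,
     expand("dbpedia:Columbia_University_Graduate_School_of_Journalism")),
    (school, "http://news.example.org/rel/mentionedIn",
     "http://news.example.org/story/123"),
}

merged = canonicalize(publisher_triples)
print(merged)
```

After canonicalization, the publisher’s fact about the school is expressed in terms of the DBPedia URL, so it can be combined with facts from any other source that uses the same URL.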

DBPedia is vast and contains entries on many objects. If you go to http://dbpedia.org/page/Columbia_University_Graduate_School_of_Journalism you will see everything that DBPedia knows about Columbia Journalism School, represented as triples (the subject of every triple on this page is the school, so it’s implicit here). If you go to http://dbpedia.org/data/Columbia_University_Graduate_School_of_Journalism.json you will get the same information in machine-readable format. Another important database is GeoNames, which contains machine-readable information on millions of geographical entities worldwide: not just their coordinates but their shapes, containment (Paris is in France), and adjacencies. The New York Times also publishes a subset of its ontology as linked open data, including owl:sameAs relations that map its entities to DBPedia entities (example).
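The machine-readable version can be flattened back into triples with a few lines of code. The sketch below assumes the JSON is laid out as subject, then relation, then a list of objects that each carry a `value` field; the live DBPedia response may differ in detail. The sample document is a tiny hand-written stand-in, not real DBPedia output, and `triples_from_rdf_json` is a hypothetical helper name.

```python
import json

# Hand-written sample in the assumed layout; not actual DBPedia data.
SAMPLE = """
{
  "http://dbpedia.org/resource/Columbia_University_Graduate_School_of_Journalism": {
    "http://www.w3.org/2000/01/rdf-schema#label": [
      {"type": "literal", "value": "Columbia University Graduate School of Journalism", "lang": "en"}
    ],
    "http://example.org/rel/locatedIn": [
      {"type": "uri", "value": "http://dbpedia.org/resource/New_York_City"}
    ]
  }
}
"""


def triples_from_rdf_json(text):
    """Flatten subject -> relation -> [objects] JSON into (s, r, o) tuples."""
    data = json.loads(text)
    triples = []
    for subject, relations in data.items():
        for relation, objects in relations.items():
            for obj in objects:
                triples.append((subject, relation, obj["value"]))
    return triples


for triple in triples_from_rdf_json(SAMPLE):
    print(triple)
```

To try this against the real URL, the text of the response would be passed to the same function, for example after downloading it with `urllib.request.urlopen`.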

So what can we actually do with all of this? In theory, we can combine propositions from multiple publishers to do inference. So if database A says Alice is Bob’s sister, and database B says Alice is Mary’s mother, then we can infer that Bob is Mary’s uncle. Except that, as decades of AI research have shown, propositional inference is brittle. It’s terrible at common sense, exceptions, etc.
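The family example can be sketched as one hand-written rule applied to the union of two databases. Short names stand in for URLs here, and the relation names (`siblingOf`, `motherOf`, `fatherOf`, `gender`, `uncleOf`) are hypothetical. Notice that the rule needs an explicit fact that Bob is male, something a human reader would take for granted; this is a small taste of the brittleness.

```python
def infer_uncles(triples):
    """Infer (X, uncleOf, Z) when X is a male sibling of one of Z's parents."""
    siblings = {(s, o) for s, r, o in triples if r == "siblingOf"}
    # Sibling is symmetric, so add the reverse direction explicitly.
    siblings |= {(b, a) for a, b in siblings}
    males = {s for s, r, o in triples if r == "gender" and o == "male"}
    parents = {(s, o) for s, r, o in triples if r in ("motherOf", "fatherOf")}
    return {
        (sibling, "uncleOf", child)
        for parent, child in parents
        for person, sibling in siblings
        if person == parent and sibling in males
    }


database_a = {("Alice", "siblingOf", "Bob"), ("Bob", "gender", "male")}
database_b = {("Alice", "motherOf", "Mary")}

# Neither database alone supports the inference; their union does.
print(infer_uncles(database_a | database_b))

# Without the explicit gender fact, the rule silently infers nothing.
print(infer_uncles({("Alice", "siblingOf", "Bob")} | database_b))
```

Every exception or piece of background knowledge has to be written down as another fact or another rule, which is why this style of inference scales poorly to common sense.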

Perhaps the most interesting real-world application of knowledge representation is general question answering. Much like typing a question into a search engine, we allow the user to ask questions in human language and expect the computer to give the right answer. The state of the art in this area is the DeepQA system from IBM, which competed on Jeopardy! and won. The system uses a hybrid approach, with several hundred different types of statistical and proposition-based reasoning modules, and terabytes of knowledge in both unstructured and structured form. The right module is selected at run time by a machine learning model that tries to predict which approach will give the correct answer for any given question. DeepQA uses massive triplestores of information, but they contain a proposition giving the answer for only about 3% of all questions. This doesn’t mean that linked data and its propositional knowledge are useless, just that they are not going to be the basis of “general artificial intelligence” software. In fact, linked data is already in wide use, but in specialized applications.

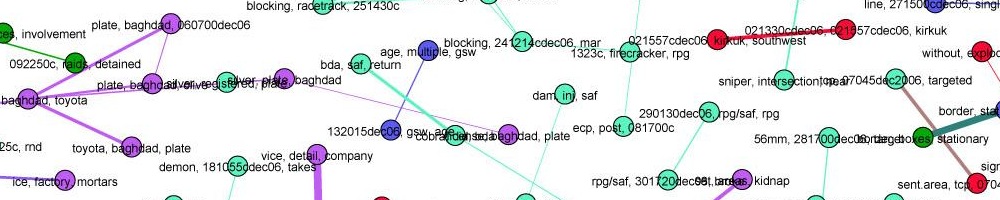

Finally, we looked at the problem of extracting propositions from text. The Reverb algorithm (in your readings) gives a taste of the challenges involved here, and you can search their database of facts extracted from 500 million web pages. A big part of proposition extraction is named entity recognition (NER). The best open implementation is probably the Stanford NER library, but the Reuters OpenCalais service performs a lot better, and you will use it for assignment 3. Google has pushed the state of the art in both NER and proposition extraction as part of their Knowledge Graph, which extracts structured information from the entire web.

Your readings were:

- A fundamental way newspaper sites need to change, Adrian Holovaty

- The next web of open, linked data – Tim Berners-Lee TED talk

- Identifying Relations for Open Information Extraction, Fader, Soderland, and Etzioni (Reverb algorithm)

- Standards-based journalism in a semantic economy, Xark

- What the semantic web can represent – Tim Berners-Lee

- Building Watson: an overview of the DeepQA project

- Can an algorithm write a better story than a reporter? Wired, 2012.