The course is a hands-on, research-level introduction to the areas of computer science that are directly relevant to journalism and to the broader project of producing an informed and engaged public. We study two big ideas: the application of computation to produce journalism (such as data science for investigative reporting), and journalism about areas that involve computation (such as the analysis of credit scoring algorithms).

Along the way we will touch on many topics: information recommendation systems but also filter bubbles, principles of statistical analysis but also the human processes which generate data, network analysis and its role in investigative journalism, visualization techniques and the cognitive effects involved in viewing a visualization.

Assignments will require programming in Python, but the emphasis will be on clearly articulating the connection between the algorithmic and the editorial.

Research-level computer science material will be discussed in class, but the emphasis will be on understanding the capabilities and limitations of this technology. Students with a CS background will have the opportunity for algorithmic exploration and innovation; however, the primary goal of the course is thoughtful, application-oriented research and design.

Format of the class, grading and assignments.

This is a fourteen-week, six-point course for CS & journalism dual degree students. (It is a three-point course for cross-listed students, who also do not have to complete the final project.) The class is conducted in a seminar format. Assigned readings and computational techniques will form the basis of class discussion. The course will be graded as follows:

- Assignments: 40%. There will be five homework assignments.

- Final project: 40%. Dual degree students will complete a medium-sized final project (for other students, this 40% comes from assignments).

- Class participation: 20%

Assignments will involve experimentation with fundamental computational techniques. Some assignments will require intermediate-level coding in Python, but the emphasis will be on thoughtful and critical analysis. As this is a journalism course, you will be expected to write clearly. The final project can be either a piece of software (especially a plugin or extension to an existing tool), a data-driven story, or a research paper on a relevant technique.

The class is conducted on a pass/fail basis for journalism students, in line with the journalism school’s grading system. Students from other departments will receive a letter grade.

Week 1: Introduction and Clustering – 9/8

Slides.

First we ask: where do computer science and journalism intersect? CS techniques can help journalism in two main ways: using computation to do journalism, and doing journalism about computation. We’ll spend most of our time on the former: data-driven reporting, story presentation, information filtering, and effect tracking. Then we jump right into clustering and the document vector space model, which we’ll need to study filtering.
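To make the document vector space model concrete, here is a minimal sketch in plain Python. It turns each document into a sparse TF-IDF vector and compares documents by cosine similarity, the distance that clustering algorithms then group on. It makes several simplifying assumptions: whitespace tokenization, no stemming or stop-word removal, and raw counts for tf with log(N/df) for idf. That is only one of several weighting variants.

```python
import math
from collections import Counter

def tfidf_vectors(docs):
    """Return one sparse TF-IDF vector (dict: term -> weight) per document.

    Assumes whitespace tokenization, tf = raw count, idf = log(N / df).
    """
    tokenized = [doc.lower().split() for doc in docs]
    n_docs = len(tokenized)
    # Document frequency: the number of documents each term appears in.
    df = Counter(term for tokens in tokenized for term in set(tokens))
    vectors = []
    for tokens in tokenized:
        tf = Counter(tokens)
        vectors.append({t: c * math.log(n_docs / df[t]) for t, c in tf.items()})
    return vectors

def cosine(u, v):
    """Cosine similarity between two sparse vectors stored as dicts."""
    dot = sum(w * v.get(t, 0.0) for t, w in u.items())
    norm_u = math.sqrt(sum(w * w for w in u.values()))
    norm_v = math.sqrt(sum(w * w for w in v.values()))
    if norm_u == 0 or norm_v == 0:
        return 0.0
    return dot / (norm_u * norm_v)

# Hypothetical headlines used only for illustration.
docs = [
    "senate passes budget bill",
    "senate debates budget bill",
    "storm floods coastal town",
]
vecs = tfidf_vectors(docs)
print(cosine(vecs[0], vecs[1]))  # shares weighted terms, so positive
print(cosine(vecs[0], vecs[2]))  # no shared terms, so zero
```

A term that appears in every document gets idf = log(1) = 0 and drops out of the comparison. This is the intuition behind "TF-IDF is about what matters."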

References

- Computational Journalism, Cohen, Turner, Hamilton

- TF-IDF is about what matters, Aaron Schumacher

- Introduction to Information Retrieval Chapter 6, Scoring, Term Weighting, and The Vector Space Model, Manning, Raghavan, and Schütze.

- How ProPublica’s Message Machine reverse engineers political microtargeting, Jeff Larson

Viewed in class

Week 2: Filtering Algorithms – 9/15

Slides.

The filtering algorithms we will discuss this week are used in just about everything: search engines, document set analysis, figuring out when two different articles are about the same story, finding trending topics. The main topics are matrix factorization, probabilistic topic modeling (à la LDA) and more general plate-notation graphical models, and word embeddings. To bring this into practice, we will look at Columbia Newsblaster (a precursor to Google News) and the New York Times recommendation engine.
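As one concrete instance of matrix factorization, the sketch below uses non-negative matrix factorization (NMF) with the Lee–Seung multiplicative updates. The lectures may use other factorizations, such as SVD-based latent semantic analysis, so treat this as an illustration of the idea rather than the course's method. It factors a document-term count matrix V into W (document-topic loadings) and H (topic-term weights). The tiny matrix and the four vocabulary words are invented for illustration.

```python
import random

def transpose(A):
    """Transpose a matrix stored as a list of rows."""
    return [list(col) for col in zip(*A)]

def matmul(A, B):
    """Multiply two matrices stored as lists of rows."""
    Bt = transpose(B)
    return [[sum(a * b for a, b in zip(row, col)) for col in Bt] for row in A]

def sq_error(V, W, H):
    """Squared Frobenius norm of V - WH."""
    WH = matmul(W, H)
    return sum((v - x) ** 2 for rv, rx in zip(V, WH) for v, x in zip(rv, rx))

def nmf(V, k, iters=200, seed=0):
    """Factor nonnegative V (n x m) as V ~ WH with W (n x k) and H (k x m),
    using multiplicative updates that do not increase squared error."""
    rng = random.Random(seed)
    n, m = len(V), len(V[0])
    W = [[rng.random() + 0.1 for _ in range(k)] for _ in range(n)]
    H = [[rng.random() + 0.1 for _ in range(m)] for _ in range(k)]
    eps = 1e-9  # guards against division by zero
    for _ in range(iters):
        # H <- H * (W^T V) / (W^T W H), elementwise
        Wt = transpose(W)
        num, den = matmul(Wt, V), matmul(Wt, matmul(W, H))
        H = [[H[a][j] * num[a][j] / (den[a][j] + eps) for j in range(m)] for a in range(k)]
        # W <- W * (V H^T) / (W H H^T), elementwise
        Ht = transpose(H)
        num, den = matmul(V, Ht), matmul(matmul(W, H), Ht)
        W = [[W[i][a] * num[i][a] / (den[i][a] + eps) for a in range(k)] for i in range(n)]
    return W, H

# Hypothetical counts. Rows are documents; columns are: tax, budget, war, troops.
V = [
    [2, 1, 0, 0],
    [4, 2, 0, 0],
    [0, 0, 1, 3],
    [0, 0, 2, 6],
]
W, H = nmf(V, k=2, iters=500)
print("error:", round(sq_error(V, W, H), 4))
for topic in H:
    print([round(x, 2) for x in topic])  # each row of H is a "topic" over terms
```

NMF is used here because its nonnegativity makes the rows of H readable as topics, which connects naturally to LDA. SVD would give a similar low-rank approximation, but with signed entries.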

Required

References

Discussed in class

Assignment: LDA analysis of State of the Union speeches.
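The assignment can be done with an existing topic modeling library. To show what such a library does internally, here is a small, hypothetical from-scratch sketch of LDA fitted by collapsed Gibbs sampling. The hyperparameters alpha and beta, the iteration count, and the toy "speeches" are arbitrary assumptions, not values from the assignment.

```python
import random

def lda_gibbs(docs, k, alpha=0.1, beta=0.01, iters=200, seed=0):
    """Collapsed Gibbs sampling for LDA.

    docs: list of token lists. Returns (doc_topic, topic_word): smoothed
    estimates of each document's topic mixture and each topic's word distribution.
    """
    rng = random.Random(seed)
    vocab = sorted({w for doc in docs for w in doc})
    w_id = {w: i for i, w in enumerate(vocab)}
    n_vocab = len(vocab)
    n_dt = [[0] * k for _ in docs]            # tokens in doc d assigned to topic t
    n_tw = [[0] * n_vocab for _ in range(k)]  # times word w is assigned to topic t
    n_t = [0] * k                             # total tokens assigned to topic t
    z = []                                    # current topic of every token

    def update(d, t, wi, delta):
        n_dt[d][t] += delta
        n_tw[t][wi] += delta
        n_t[t] += delta

    # Random initial topic assignment for every token.
    for d, doc in enumerate(docs):
        z.append([rng.randrange(k) for _ in doc])
        for w, t in zip(doc, z[d]):
            update(d, t, w_id[w], +1)

    for _ in range(iters):
        for d, doc in enumerate(docs):
            for i, w in enumerate(doc):
                wi = w_id[w]
                update(d, z[d][i], wi, -1)  # take this token out of the counts
                # Full conditional p(z_i = t | all other assignments), unnormalized.
                weights = [
                    (n_dt[d][t] + alpha) * (n_tw[t][wi] + beta) / (n_t[t] + n_vocab * beta)
                    for t in range(k)
                ]
                z[d][i] = rng.choices(range(k), weights=weights)[0]
                update(d, z[d][i], wi, +1)

    doc_topic = [[(n_dt[d][t] + alpha) / (len(doc) + k * alpha) for t in range(k)]
                 for d, doc in enumerate(docs)]
    topic_word = [{w: (n_tw[t][w_id[w]] + beta) / (n_t[t] + n_vocab * beta) for w in vocab}
                  for t in range(k)]
    return doc_topic, topic_word

# Hypothetical toy "speeches".
docs = [
    "tax budget deficit tax".split(),
    "war troops defense war".split(),
    "budget tax spending deficit".split(),
    "troops war security defense".split(),
]
doc_topic, topic_word = lda_gibbs(docs, k=2)
for t, dist in enumerate(topic_word):
    print(t, sorted(dist, key=dist.get, reverse=True)[:3])
```

On real speeches, the preprocessing choices (stop words, stemming, the number of topics k) usually shape the results as much as the sampler does.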

Week 3: Filters as Editors – 9/22

Slides.

We’ve studied filtering algorithms, but how are they used in practice, and how should they be used? We will study the details of several algorithmic filtering approaches used by social networks, and effects such as polarization and filter bubbles.

Readings

References

Viewed in class

Week 4: Computational Journalism Platforms – 9/29

Slides.

We introduce the Overview document mining system and the Computational Journalism Workbench. Then we develop pitches for final projects, which may include writing plugins for these systems.

Guest Speaker: Alex Spangher, New York Times

Readings

References

Assignment: Design a filtering algorithm for an information source of your choosing.

Week 5: Quantification, Counting, and Statistics – 10/6

Slides.

Every journalist needs a basic grasp of statistics: not t-tests and all of that, but something more grounded and practical. How do we know we’re measuring the right thing? Why are we doing stats at all? Then comes a journalism-oriented tutorial on the fundamental ideas of probability, conditional probability, and Bayes’ theorem.
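Bayes’ theorem is where conditional probability most often trips up reporting. Suppose an algorithm flags a case and we want to know how likely it is that the flag is correct. The sketch below applies P(H | E) = P(E | H) P(H) / P(E). The scenario and numbers are hypothetical: a 1% base rate, a 90% detection rate, and a 5% false-alarm rate.

```python
def posterior(prior, p_e_given_h, p_e_given_not_h):
    """Bayes' theorem: P(H | E) = P(E | H) P(H) / P(E),
    where P(E) = P(E | H) P(H) + P(E | not H) (1 - P(H))."""
    p_e = p_e_given_h * prior + p_e_given_not_h * (1 - prior)
    return p_e_given_h * prior / p_e

# Hypothetical: 1% of cases are fraud, the flag catches 90% of fraud,
# and it wrongly flags 5% of legitimate cases.
print(round(posterior(0.01, 0.90, 0.05), 3))  # prints 0.154
```

Even with a detector that sounds accurate, only about 15% of flagged cases are fraud, because legitimate cases vastly outnumber fraudulent ones.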

Required

Recommended

No class 10/13

Week 6: Inference – 10/20

Slides.

There is a long history of fields grappling with the problem of determining truth in the face of uncertainty, from statistics to intelligence analysis. We’ll start with statistics and the notion of randomness that is so crucial to the idea of statistical significance. Then we’ll talk about determining causality, p-hacking and reproducibility, and the more qualitative, closer-to-real-world method of analysis of competing hypotheses.
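One way to see why randomness is central to significance is a permutation test. It asks: if the group labels were assigned at random, how often would we see a difference at least this large? The sketch below is a generic two-sided test on the difference in means. The data values and the number of resamples are assumptions for illustration, and the readings may present significance through other tests.

```python
import random

def permutation_test(a, b, n_resamples=10_000, seed=0):
    """Two-sided permutation test for a difference in means.

    Shuffles the pooled values into groups of the original sizes and counts
    how often the shuffled difference is at least as extreme as the observed
    one. Returns an estimated p-value.
    """
    rng = random.Random(seed)
    observed = abs(sum(a) / len(a) - sum(b) / len(b))
    pooled = list(a) + list(b)
    extreme = 0
    for _ in range(n_resamples):
        rng.shuffle(pooled)
        x, y = pooled[:len(a)], pooled[len(a):]
        if abs(sum(x) / len(x) - sum(y) / len(y)) >= observed:
            extreme += 1
    # Add-one correction so the estimated p-value is never exactly zero.
    return (extreme + 1) / (n_resamples + 1)

# Hypothetical measurements for two groups.
print(permutation_test([10, 11, 12, 13, 14], [1, 2, 3, 4, 5]))
```

This framing also shows how p-hacking works. Running many such tests and reporting only the one that crosses a threshold makes "rare under chance" results much less rare.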

Required

Recommended

Viewed in class

Week 7: Discrimination and Algorithmic Accountability – 10/27

Slides.

Two topics this week. Discrimination is an important topic for reporters and for society, but analyzing discrimination data is more subtle and complex than it might seem. Algorithmic accountability is the study of the algorithms that regulate society, from high frequency trading to predictive policing. We’re at their mercy, unless we learn how to investigate them.
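A classic reason discrimination data is subtle is Simpson’s paradox, the pattern discussed in the Berkeley admissions paper listed below. The counts in this sketch are invented, not the Berkeley figures. Women have a higher admission rate in each department but a lower rate overall, because most women apply to the more selective department.

```python
# Hypothetical (admitted, applied) counts, invented for illustration.
data = {
    "Dept A": {"men": (80, 100), "women": (18, 20)},   # less selective
    "Dept B": {"men": (2, 20), "women": (20, 100)},    # more selective
}

def rate(admitted, applied):
    return admitted / applied

def aggregate(data, group):
    """Admission rate for a group after pooling all departments."""
    admitted = sum(d[group][0] for d in data.values())
    applied = sum(d[group][1] for d in data.values())
    return rate(admitted, applied)

for dept, groups in data.items():
    print(dept, {g: rate(*counts) for g, counts in groups.items()})
print("Overall", {g: round(aggregate(data, g), 3) for g in ("men", "women")})
```

Which comparison is the "right" one depends on a causal question (is department choice part of the discrimination or not?), not on the arithmetic alone.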

Required

References

- How Algorithms Shape our World, Kevin Slavin

- How the Journal Tested Prices and Deals Online, Jeremy Singer-Vine, Ashkan Soltani and Jennifer Valentino-DeVries

- How Big Data is Unfair, Moritz Hardt

- Testing for Racial Discrimination in Police Searches of Motor Vehicles, Simoiu et al.

- Sex Bias in Graduate Admissions: Data from Berkeley, P. J. Bickel, E. A. Hammel, J. W. O’Connell

- How We Analyzed the COMPAS Recidivism Algorithm, Larson et al.

- Big Data’s Disparate Impact, Barocas and Selbst

Assignment: Analyze NYPD stop and frisk data for racial discrimination.
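One simple starting point for this kind of analysis is the outcome test. It compares search "hit rates", the fraction of searches that find contraband, across groups. A lower hit rate for one group can suggest officers search that group on weaker evidence. Simoiu et al. explain why hit rates alone can mislead. The records and field layout below are hypothetical, not the NYPD dataset’s actual columns.

```python
from collections import defaultdict

# Hypothetical stop records: (group, searched, contraband_found).
stops = [
    ("group_a", True, True), ("group_a", True, False), ("group_a", True, True),
    ("group_a", False, False),
    ("group_b", True, False), ("group_b", True, False), ("group_b", True, True),
    ("group_b", True, False), ("group_b", False, False),
]

def hit_rates(records):
    """Fraction of searches that found contraband, per group."""
    searches = defaultdict(int)
    hits = defaultdict(int)
    for group, searched, found in records:
        if searched:
            searches[group] += 1
            hits[group] += found  # bool counts as 0 or 1
    return {g: hits[g] / searches[g] for g in searches}

print(hit_rates(stops))
```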


Week 8: Visualization, Network Analysis – 11/3

Slides.

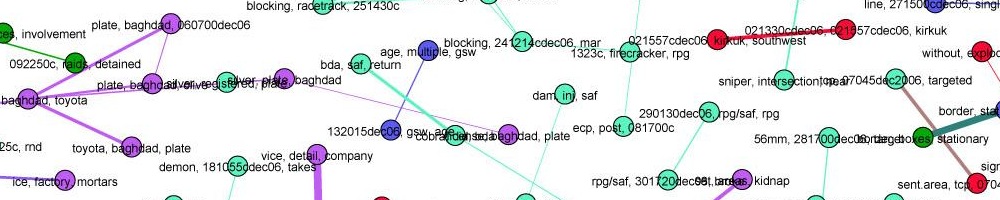

Visualization helps people interpret information. We’ll look at design principles from user experience considerations, graphic design, and the study of the human visual system. Network analysis (aka social network analysis, link analysis) is a promising and popular technique for uncovering relationships between diverse individuals and organizations. It is widely used in intelligence and law enforcement, and increasingly in journalism.

Readings

- Visualization, Tamara Munzner

- Network Analysis in Journalism: Practices and Possibilities, Stray

References

Examples:

Assignment: Compare different centrality metrics in Gephi.
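Gephi computes centrality metrics for you. The sketch below shows what two of them measure and how they can disagree. Degree counts a node’s direct ties. Betweenness, here computed with Brandes’ algorithm for an unweighted, undirected graph, counts the shortest paths between other nodes that pass through a node. The two-triangle graph is hypothetical. Its low-degree "broker" x has the highest betweenness.

```python
from collections import deque

def degree_centrality(graph):
    """Degree normalized by the maximum possible degree, n - 1."""
    n = len(graph)
    return {v: len(nbrs) / (n - 1) for v, nbrs in graph.items()}

def betweenness_centrality(graph):
    """Brandes' algorithm, unnormalized, for an undirected unweighted graph
    (each unordered pair of endpoints counted once)."""
    bc = dict.fromkeys(graph, 0.0)
    for s in graph:
        # Breadth-first search from s: shortest-path counts and predecessors.
        stack = []
        preds = {v: [] for v in graph}
        sigma = dict.fromkeys(graph, 0)
        sigma[s] = 1
        dist = dict.fromkeys(graph, -1)
        dist[s] = 0
        queue = deque([s])
        while queue:
            v = queue.popleft()
            stack.append(v)
            for w in graph[v]:
                if dist[w] < 0:
                    dist[w] = dist[v] + 1
                    queue.append(w)
                if dist[w] == dist[v] + 1:
                    sigma[w] += sigma[v]
                    preds[w].append(v)
        # Accumulate dependencies, farthest nodes first.
        delta = dict.fromkeys(graph, 0.0)
        while stack:
            w = stack.pop()
            for v in preds[w]:
                delta[v] += sigma[v] / sigma[w] * (1 + delta[w])
            if w != s:
                bc[w] += delta[w]
    # Every pair was counted once from each endpoint.
    return {v: b / 2 for v, b in bc.items()}

# Hypothetical network: two triangles joined through a broker, x.
graph = {
    "a": ["b", "c"], "b": ["a", "c"], "c": ["a", "b", "x"],
    "x": ["c", "d"],
    "d": ["x", "e", "f"], "e": ["d", "f"], "f": ["d", "e"],
}
print(degree_centrality(graph))
print(betweenness_centrality(graph))
```

Here c and d have the most connections, but every path between the two groups runs through x. That is the kind of structural role an investigation might care about more than raw connection counts.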

Week 9: Knowledge Representation – 11/10

Slides.

How can journalism benefit from encoding knowledge in some formal system? Is journalism in the media business or the data business? And could we use knowledge bases and inferential engines to do journalism better? This gets us deep into the issue of how knowledge is represented in a computer. We’ll look at traditional databases vs. linked data and graph databases, entity and relation detection from unstructured text, and both propositional and probabilistic formalisms. Plus: NLP in investigative journalism, automated fact checking, and more.
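Linked data and graph databases store facts as (subject, predicate, object) triples rather than rows in fixed tables. The plain-Python sketch below is not RDF and not a real graph database. It shows pattern matching with wildcards and one hand-written inference step. All entities and predicate names are hypothetical.

```python
# A toy knowledge base of (subject, predicate, object) triples; all hypothetical.
triples = {
    ("Jane Roe", "director_of", "Acme Holdings"),
    ("Jane Roe", "donated_to", "Committee X"),
    ("Acme Holdings", "owns", "Acme Shipping"),
    ("John Doe", "director_of", "Acme Shipping"),
}

def match(pattern, kb):
    """Return the triples matching a pattern, where None is a wildcard."""
    return sorted(t for t in kb if all(p is None or p == v for p, v in zip(pattern, t)))

def controlled_companies(person, kb):
    """One inference step: companies a person directs, plus companies they own."""
    direct = {o for s, p, o in kb if s == person and p == "director_of"}
    owned = {o for s, p, o in kb if s in direct and p == "owns"}
    return direct | owned

print(match((None, "director_of", None), triples))
print(controlled_companies("Jane Roe", triples))
```

The inference step reveals that Jane Roe and John Doe are connected through Acme Shipping, although no single stored fact says so.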

Readings

References

Viewed in class

Assignment: Text enrichment experiments using OpenCalais entity extraction.

Week 10: Truth and Trust – 11/17

We went through The Ethics of Persuasion slides.

Computational propaganda. Structure of information operations. Fake news detection and tagging. Credibility schema. Systems to detect and combat abuse and harassment.

Speaker: Ed Bice, Meedan

Readings

References

No class 11/24

Week 11: Privacy, Security, and Censorship – 12/1

Slides.

Who is watching our online activities? Who gets access to all of this mass intelligence, and what does the ability to surveil everything all the time mean, both practically and ethically, for journalism? In this lecture we cover both the basics of digital security and methods for dealing with specific journalistic situations: anonymous sources, handling leaks, border crossings, and so on.

Readings

References

Week 12: Tracking Flow and Impact – 12/8

How does information flow in the online ecosystem? What happens to a story after it’s published? How do items spread through social networks? We’re just beginning to be able to track ideas as they move through the network, but it’s still very difficult to really measure the public interest impact of journalism.
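A common simplified model of how items spread through a network is the independent cascade model. Each newly activated node gets one chance to pass the item to each neighbor, succeeding with probability p. The sketch below is that textbook model, not the method of any reading listed here. Several of those readings measure diffusion empirically and question whether news really spreads like a simple contagion. The share graph and the value of p are hypothetical.

```python
import random

def independent_cascade(graph, seeds, p, seed=0):
    """Simulate one run of the independent cascade model.

    graph: dict mapping each node to the neighbors who see its shares.
    Returns the set of nodes that ended up activated.
    """
    rng = random.Random(seed)
    active = set(seeds)
    frontier = list(seeds)
    while frontier:
        newly_active = []
        for u in frontier:
            for v in graph.get(u, []):
                # Each edge gets exactly one activation attempt.
                if v not in active and rng.random() < p:
                    active.add(v)
                    newly_active.append(v)
        frontier = newly_active
    return active

def mean_cascade_size(graph, seeds, p, runs=1000):
    """Average number of activated nodes over many random runs."""
    return sum(len(independent_cascade(graph, seeds, p, seed=r)) for r in range(runs)) / runs

# Hypothetical share graph: a publisher followed by readers who reshare.
shares = {
    "publisher": ["r1", "r2", "r3"],
    "r1": ["r4", "r5"],
    "r2": ["r5", "r6"],
    "r3": [],
}
print(mean_cascade_size(shares, ["publisher"], p=0.3))
```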

Readings

References

- Meme-tracking and the Dynamics of the News Cycle, Leskovec et al.

- NewsLynx: A Tool for Newsroom Impact Measurement, Michael Keller, Brian Abelson

- The role of social networks in information diffusion, Eytan Bakshy et al.

- Defining Moments in Risk Communication Research: 1996–2005, Katherine McComas

- Chain Letters and Evolutionary Histories, Charles H. Bennett, Ming Li and Bin Ma

- Competition among memes in a world with limited attention, Weng et al.

- In the news cycle, memes spread more like a heartbeat than a virus, Zach Seward

- How hidden networks orchestrated Stop Kony 2012, Gilad Lotan

Week 13: Final Project Presentations – 12/15