The text processing algorithms we discussed this week are used in just about everything: search engines, document set visualization, figuring out when two different articles are about the same story, finding trending topics. The vector space document model is fundamental to algorithmic handling of news content, and we will need it to understand how just about every filtering and personalization system works.

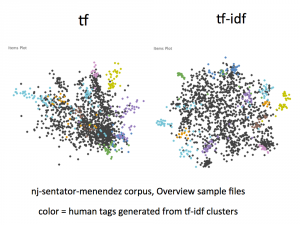

Here’s a slide I made showing the difference between TF and TF-IDF on the structure of the document vector space. I’m sure someone has tried this before, but it’s the first such comparison that I’ve seen, and it matches the theoretical arguments put forth by Salton in 1975.
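To make the two weightings concrete, here is a minimal sketch in Python. It is not the code behind the slide: the three toy documents, the whitespace tokenizer, and the function names are all made up for illustration, and it assumes one common textbook variant of the formula, raw term count times log(N / df).

```python
import math
from collections import Counter

def tokenize(text):
    # Assumption: lowercase and split on whitespace; real systems do much more.
    return text.lower().split()

def idf_weights(docs_tokens):
    # idf(t) = log(N / df(t)), where df(t) counts the documents containing t.
    n = len(docs_tokens)
    df = Counter()
    for tokens in docs_tokens:
        df.update(set(tokens))
    return {term: math.log(n / count) for term, count in df.items()}

def tf_vector(tokens):
    # TF: raw count of each term in one document.
    return dict(Counter(tokens))

def tfidf_vector(tokens, idf):
    # TF-IDF: raw count scaled by how rare the term is across the collection.
    return {term: count * idf[term] for term, count in Counter(tokens).items()}

# Hypothetical toy documents, invented for this example.
docs = [
    "the senate passed the budget bill",
    "the storm closed the airport",
    "the budget vote split the senate",
]
tokenized = [tokenize(d) for d in docs]
idf = idf_weights(tokenized)
tf = [tf_vector(t) for t in tokenized]
tfidf = [tfidf_vector(t, idf) for t in tokenized]

for term in ("the", "senate", "bill"):
    print(term, tf[0][term], round(tfidf[0][term], 3))
```

Under plain TF, "the" is the heaviest term in every document. Under TF-IDF its weight drops to zero because it appears in all three documents, while a term found in only one document, such as "bill", gets the largest weight.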

Here were the readings (from the syllabus):

- Online Natural Language Processing Course, Stanford University

- Week 7: Information Retrieval, Term-Document Incidence Matrix

- Week 7: Ranked Information Retrieval, Introducing Ranked Retrieval

- Week 7: Ranked Information Retrieval, Term Frequency Weighting

- Week 7: Ranked Information Retrieval, Inverse Document Frequency Weighting

- Week 7: Ranked Information Retrieval, TF-IDF weighting

- Probabilistic Topic Models, David M. Blei

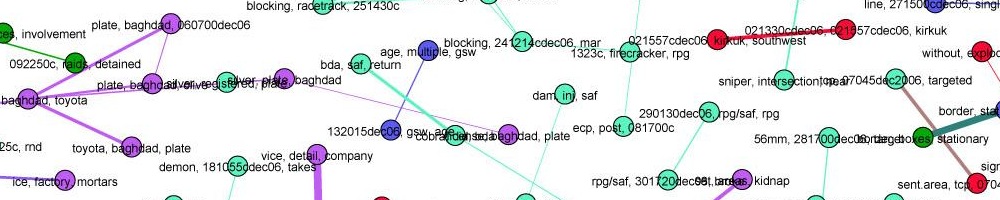

- A full-text visualization of the Iraq war logs, Jonathan Stray

- Latent Semantic Analysis, Peter Wiemer-Hastings

- Probabilistic Latent Semantic Indexing, Hofmann

- Introduction to Information Retrieval Chapter 6, Scoring, Term Weighting, and The Vector Space Model, Manning, Raghavan, and Schütze.

Jeff Larson of ProPublica came and spoke to us about his work on Message Machine, which uses tf-idf to cluster and “reverse engineer” the campaign emails from Obama and Romney.

I mentioned that it’s possible to do better than TF-IDF weighting, but not by much, and that using bigrams as features doesn’t help much. My personal favorite research on term-weighting uses a genetic algorithm to evolve optimal formulas. (All of these discussions of “best” are measured with respect to a set of documents manually tagged as relevant/not relevant to specific test queries, which matches search engine use but may not be what we want for specific journalistic inquiries.)
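For readers who haven't seen them, "bigrams as features" just means counting adjacent word pairs alongside single words. A small sketch, with a made-up sentence and hypothetical function names:

```python
from collections import Counter

def bigrams(tokens):
    # Adjacent word pairs, each joined into a single feature string.
    return [" ".join(pair) for pair in zip(tokens, tokens[1:])]

# Hypothetical example sentence.
tokens = "the senate passed the budget bill".split()

# The feature vector counts both the single words and the pairs.
features = Counter(tokens + bigrams(tokens))
print(features)
```

The vocabulary grows quickly this way, since most pairs occur only once, which is one plausible reason the extra features add little.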

Finally, you may be interested to know that TF-IDF + LSA + cosine similarity has a correlation of 0.6 with human ratings of the similarity of two documents on a linear scale, which is as strong as the correlation between different humans’ ratings. In a certain sense, it is not possible to create a better document similarity algorithm, because there isn’t a consistent human definition of such a concept. However, these experiments were done on short news articles, and it might be possible to do much better over specialized domains.
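The cosine similarity part of that pipeline can be sketched in a few lines. This is only an illustration under stated assumptions: it skips the LSA step entirely (that would need a singular value decomposition of the term-document matrix), uses the count times log(N / df) variant of TF-IDF, and runs on three invented documents with hypothetical function names.

```python
import math
from collections import Counter

def tfidf_vectors(docs):
    # Assumption: whitespace tokenization and tf * log(N / df) weighting.
    tokenized = [d.lower().split() for d in docs]
    n = len(tokenized)
    df = Counter()
    for tokens in tokenized:
        df.update(set(tokens))
    return [
        {term: count * math.log(n / df[term])
         for term, count in Counter(tokens).items()}
        for tokens in tokenized
    ]

def cosine_similarity(a, b):
    # Dot product of two sparse vectors divided by the product of their lengths.
    dot = sum(weight * b.get(term, 0.0) for term, weight in a.items())
    norm_a = math.sqrt(sum(w * w for w in a.values()))
    norm_b = math.sqrt(sum(w * w for w in b.values()))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)

# Hypothetical toy documents, invented for this example.
docs = [
    "the senate passed the budget bill",
    "the storm closed the airport",
    "the budget vote split the senate",
]
vecs = tfidf_vectors(docs)
sim_budget = cosine_similarity(vecs[0], vecs[2])  # two budget stories
sim_storm = cosine_similarity(vecs[0], vecs[1])   # budget story vs. storm story
print(sim_budget, sim_storm)
```

The two budget stories share "senate" and "budget" and so score above zero, while the budget and storm stories share only "the", which TF-IDF weights at zero, so their similarity is zero.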

This week we also started Assignment 1.